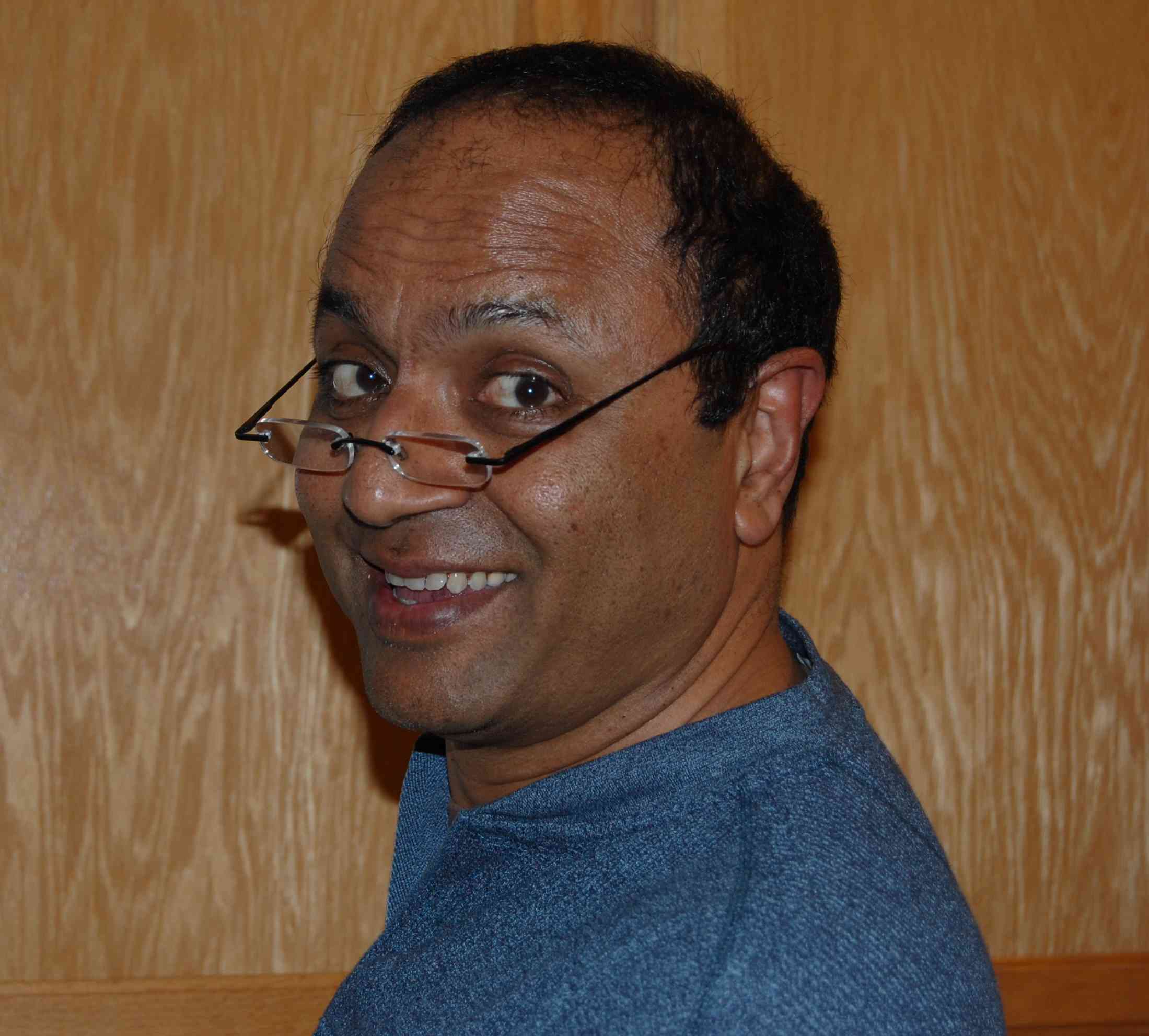

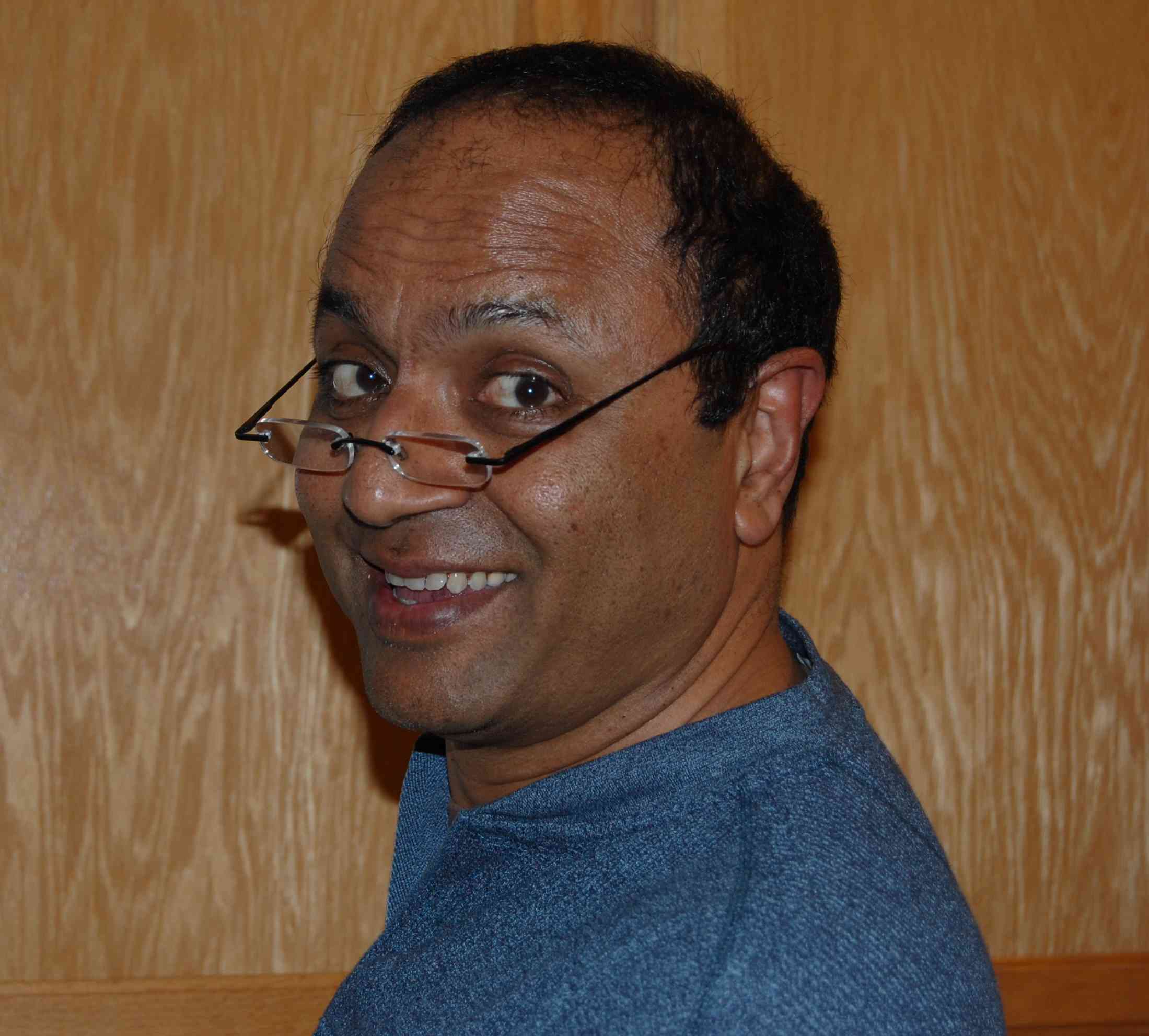

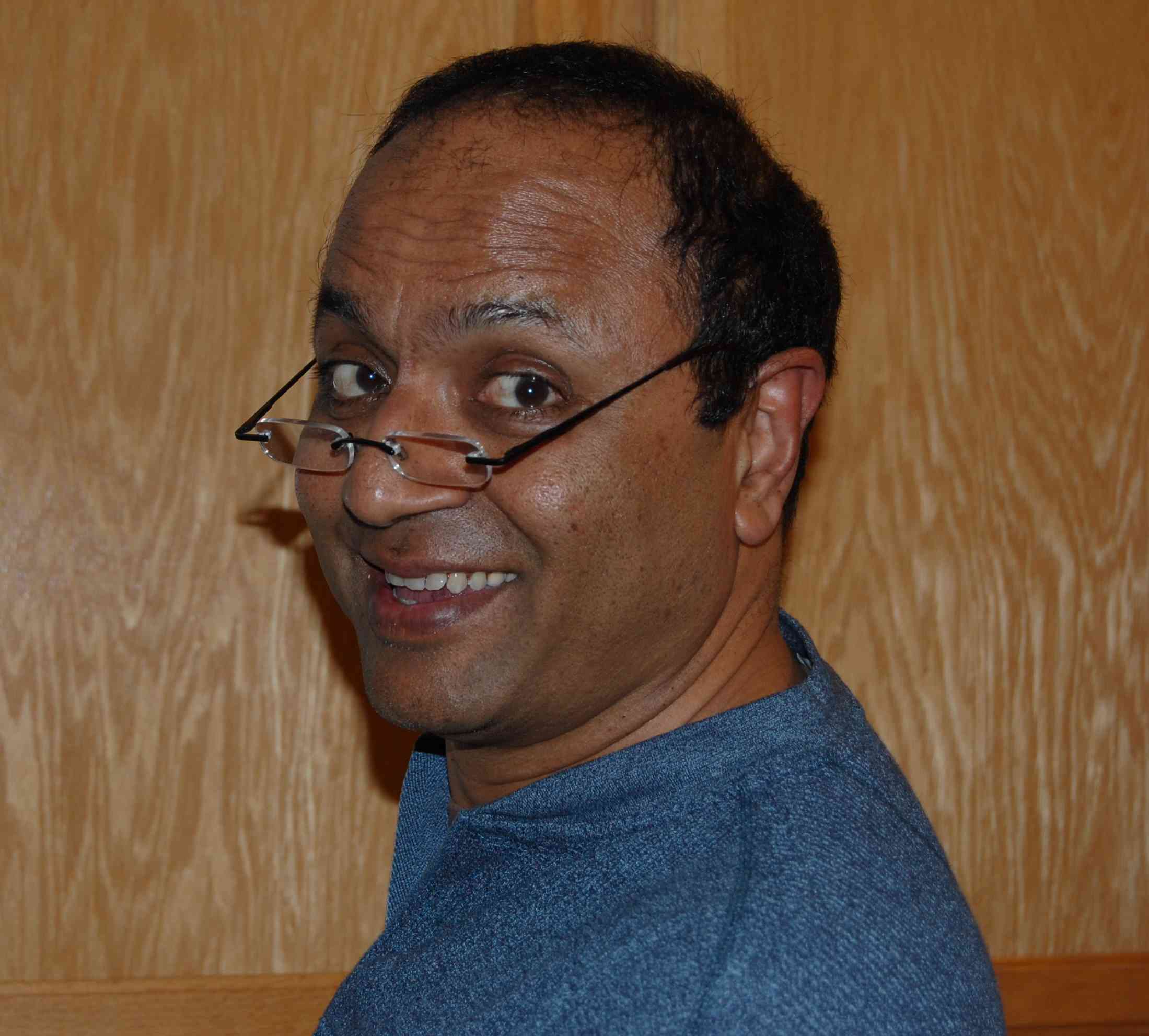

Prem Devanbu

On the Naturalness of Software, and how to exploit it for Fun and Profit.

Abstract

Programming languages, like their "natural" counterparts, are rich, powerful and expressive. But while skilled writers like Zadie Smith, Umberto Eco, and Salman Rushdie delight us with their elegant, creative deployment of the power and beauty of natural language, most of what we ordinary mortals say and write every day is Very Repetitive and Highly Predictable.

This predictability, as most of us have learned by now, is at the heart of the modern statistical revolution in speech recognition, natural language translation, question answering, and related tasks. We will argue that, despite the power and expressiveness of programming languages, most software is in fact quite repetitive and predictable, and can be fruitfully modeled using the same types of statistical models used in natural language processing. This rather unexpected finding has numerous exciting applications.
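One common way to make "predictable" concrete is to train a simple n-gram language model on code tokens and measure its cross-entropy (average bits per token) on new code: the lower the cross-entropy, the more predictable the code is to the model. The sketch below is only an illustration under stated assumptions, not the specific models used by any of the projects mentioned here: it assumes a crude regex tokenizer, a bigram model with add-one (Laplace) smoothing, and a tiny made-up corpus of Java-like loops. The names `tokenize`, `BigramModel`, and `TOKEN_RE` are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical, deliberately crude lexer: identifiers/keywords, integers,
# or any single non-space character. Not a real Java tokenizer.
TOKEN_RE = re.compile(r"[A-Za-z_]\w*|\d+|\S")


def tokenize(code: str) -> list[str]:
    """Split source text into tokens, wrapped in start/end markers."""
    return ["<s>"] + TOKEN_RE.findall(code) + ["</s>"]


class BigramModel:
    """Bigram language model over code tokens with add-one smoothing."""

    def __init__(self, corpus: list[str]) -> None:
        self.unigrams: Counter = Counter()  # how often each token serves as context
        self.bigrams: Counter = Counter()   # how often each (prev, tok) pair occurs
        vocab: set[str] = set()
        for snippet in corpus:
            toks = tokenize(snippet)
            vocab.update(toks)
            self.unigrams.update(toks[:-1])
            self.bigrams.update(zip(toks, toks[1:]))
        # +1 reserves probability mass for a token never seen in training.
        self.vocab_size = len(vocab) + 1

    def prob(self, prev: str, tok: str) -> float:
        """P(tok | prev), add-one smoothed so unseen pairs get nonzero mass."""
        return (self.bigrams[(prev, tok)] + 1) / (self.unigrams[prev] + self.vocab_size)

    def cross_entropy(self, code: str) -> float:
        """Average of -log2 P(tok | prev) over every predicted token, in bits."""
        toks = tokenize(code)
        pairs = list(zip(toks, toks[1:]))
        return -sum(math.log2(self.prob(p, t)) for p, t in pairs) / len(pairs)


# Toy training corpus: three near-identical loops (made-up data).
corpus = [
    "for (int i = 0; i < n; i++) { sum += a[i]; }",
    "for (int j = 0; j < n; j++) { total += b[j]; }",
    "for (int k = 0; k < m; k++) { count += c[k]; }",
]
model = BigramModel(corpus)

familiar = "for (int i = 0; i < m; i++) { sum += b[i]; }"
# Same tokens as `familiar`, but in reverse order.
scrambled = " ".join(reversed(TOKEN_RE.findall(familiar)))

print(f"familiar:  {model.cross_entropy(familiar):.2f} bits/token")
print(f"scrambled: {model.cross_entropy(scrambled):.2f} bits/token")
```

On this toy corpus the familiar loop gets a lower cross-entropy than the reversed token sequence, because almost every bigram in it was seen during training. Real studies use far larger corpora, longer contexts, and better smoothing; add-one smoothing is chosen here only because it is the simplest estimator that never assigns zero probability.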

This insight has led to an international research effort, with numerous projects in the US, Canada, the UK, Switzerland, and elsewhere, which have already produced many interesting results. This tutorial is a practitioner's introduction to the basic concepts of statistical natural language processing, and to current results in the area, for software engineers who want to learn about this exciting and rapidly developing field.

Speaker's Bio

Prem Devanbu received his B.Tech. from the Indian Institute of Technology in Chennai, India, before you were born, and his PhD from Rutgers University in 1994. After spending nearly 20 years at Bell Labs and its various offshoots, he escaped New Jersey traffic to join the CS faculty at UC Davis in late 1997. For almost a decade now, he has been working on ways to exploit the copious amounts of available open-source project data to bring more joy, meaning, and fulfillment to the lives of programmers.
